The Crucial Role of Transformers in Grid Efficiency and Stability

When you flip a switch, charge your phone, or power up industrial equipment, you’re tapping into one of the greatest engineering achievements in human history: the electrical grid. Behind this vast network of power plants, substations, and transmission lines lies a component that rarely gets the attention it deserves — the transformer.

From massive substation units to the quiet green boxes in residential neighborhoods, transformers are the unsung heroes of modern power systems. They do far more than simply adjust voltage. They enable efficient long-distance transmission, enhance grid stability, and act as a critical line of defense against catastrophic failures.

In this article, we’ll explore how transformers underpin grid efficiency and stability — and how advanced modeling tools like ETAP help engineers visualize and optimize their performance.

How Transformers Work: A Quick Primer

The Principle of Electromagnetic Induction

At the heart of every transformer lies the principle of electromagnetic induction. When alternating current (AC) flows through the primary winding, it creates a changing magnetic field within the transformer’s iron core. This fluctuating magnetic field induces a voltage in the secondary winding wrapped around the same core.

The key requirement? The current must be alternating. A steady direct current (DC) would produce a constant magnetic field — and without a changing field, no voltage would be induced.
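To make the AC-versus-DC point concrete, here is a minimal numerical sketch of Faraday's law, v = -N × dΦ/dt. The frequency, peak flux, and turn count are illustrative assumptions, not values from any real transformer.

```python
import math

def induced_voltage(flux, turns, t, dt=1e-6):
    """Approximate v = -N * dPhi/dt with a central finite difference."""
    dphi_dt = (flux(t + dt) - flux(t - dt)) / (2 * dt)
    return -turns * dphi_dt

FREQ_HZ = 50.0        # assumed supply frequency
PEAK_FLUX_WB = 0.01   # assumed peak core flux, in webers
TURNS = 100           # assumed number of secondary turns

def ac_flux(t):
    """Sinusoidal core flux, as produced by an AC primary current."""
    return PEAK_FLUX_WB * math.sin(2 * math.pi * FREQ_HZ * t)

def dc_flux(t):
    """Constant core flux, as produced by a steady DC primary current."""
    return PEAK_FLUX_WB

v_ac = induced_voltage(ac_flux, TURNS, t=0.0)
v_dc = induced_voltage(dc_flux, TURNS, t=0.0)
print(f"AC: {v_ac:.1f} V, DC: {v_dc:.1f} V")
```

With the changing flux, the sketch yields an induced voltage of a few hundred volts at the instant sampled. With the constant flux, the rate of change is zero, and so is the induced voltage.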

Stepping Up and Stepping Down Voltage

The brilliance of a transformer lies in its simplicity. The ratio of turns between the primary and secondary windings determines whether voltage is increased or decreased:

- More turns on the secondary winding → Step-up transformer → Higher voltage

- Fewer turns on the secondary winding → Step-down transformer → Lower voltage

This controlled voltage transformation is essential for both efficient power transmission and safe energy consumption.
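For an ideal transformer, the relationship is Vs / Vp = Ns / Np. The short sketch below applies it to one step-up and one step-down case. The turn counts are hypothetical, chosen only so the ratios come out to round numbers, and real units also have losses and leakage that this ignores.

```python
def secondary_voltage(primary_voltage, primary_turns, secondary_turns):
    """Ideal transformer: Vs / Vp = Ns / Np (losses and leakage ignored)."""
    return primary_voltage * secondary_turns / primary_turns

# More secondary turns than primary turns: step-up (hypothetical turn counts)
step_up = secondary_voltage(13_800, primary_turns=60, secondary_turns=1000)

# Fewer secondary turns than primary turns: step-down (hypothetical turn counts)
step_down = secondary_voltage(13_800, primary_turns=1150, secondary_turns=20)

print(f"Step-up:   {step_up:,.0f} V")
print(f"Step-down: {step_down:,.0f} V")
```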

Transformers and Grid Efficiency: Minimizing Energy Loss

The Challenge: I²R Losses

All transmission lines have resistance. When current flows through them, energy is lost as heat — a phenomenon known as I²R loss. Over long distances, these losses can become substantial.

Without voltage transformation, much of the electricity generated at power plants would dissipate before ever reaching consumers.

The Solution: High-Voltage Transmission

Power is defined as:

P = V × I

For a fixed amount of power, you can transmit:

- High voltage and low current, or

- Low voltage and high current

Since transmission losses depend on the square of current (I²R), reducing current dramatically reduces losses.

This is where transformers become indispensable. Step-up transformers increase voltage to hundreds of kilovolts for transmission, drastically lowering current and minimizing energy loss. Near consumers, step-down transformers safely reduce voltage for industrial and residential use.
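As a rough illustration of how strongly voltage affects I²R loss, the sketch below sends the same power over the same line at two voltages. It assumes a balanced three-phase line, where P = √3 × V × I × power factor, and it uses an assumed per-phase resistance of 20 Ω (100 km at 0.2 Ω/km) and an assumed 5 MW load. It considers only series line resistance, so its numbers are not expected to match a full simulation.

```python
import math

def line_loss_mw(power_mw, line_kv, resistance_ohm, power_factor=1.0):
    """Three-phase I^2*R loss in MW for a given power and line-to-line voltage."""
    current_a = power_mw * 1e6 / (math.sqrt(3) * line_kv * 1e3 * power_factor)
    return 3 * current_a**2 * resistance_ohm / 1e6

R_PER_PHASE_OHM = 20.0  # assumed: 100 km at 0.2 ohm/km
POWER_MW = 5.0          # assumed transmitted power

loss_34_5 = line_loss_mw(POWER_MW, 34.5, R_PER_PHASE_OHM)
loss_230 = line_loss_mw(POWER_MW, 230.0, R_PER_PHASE_OHM)
ratio = loss_34_5 / loss_230

print(f"Loss at 34.5 kV: {loss_34_5:.4f} MW")
print(f"Loss at 230 kV:  {loss_230:.4f} MW")
print(f"Ratio: {ratio:.1f}x")
```

Because current scales with 1/V and loss scales with I², the resistive loss in this idealized case falls with the square of the voltage ratio.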

Modeling Efficiency with ETAP

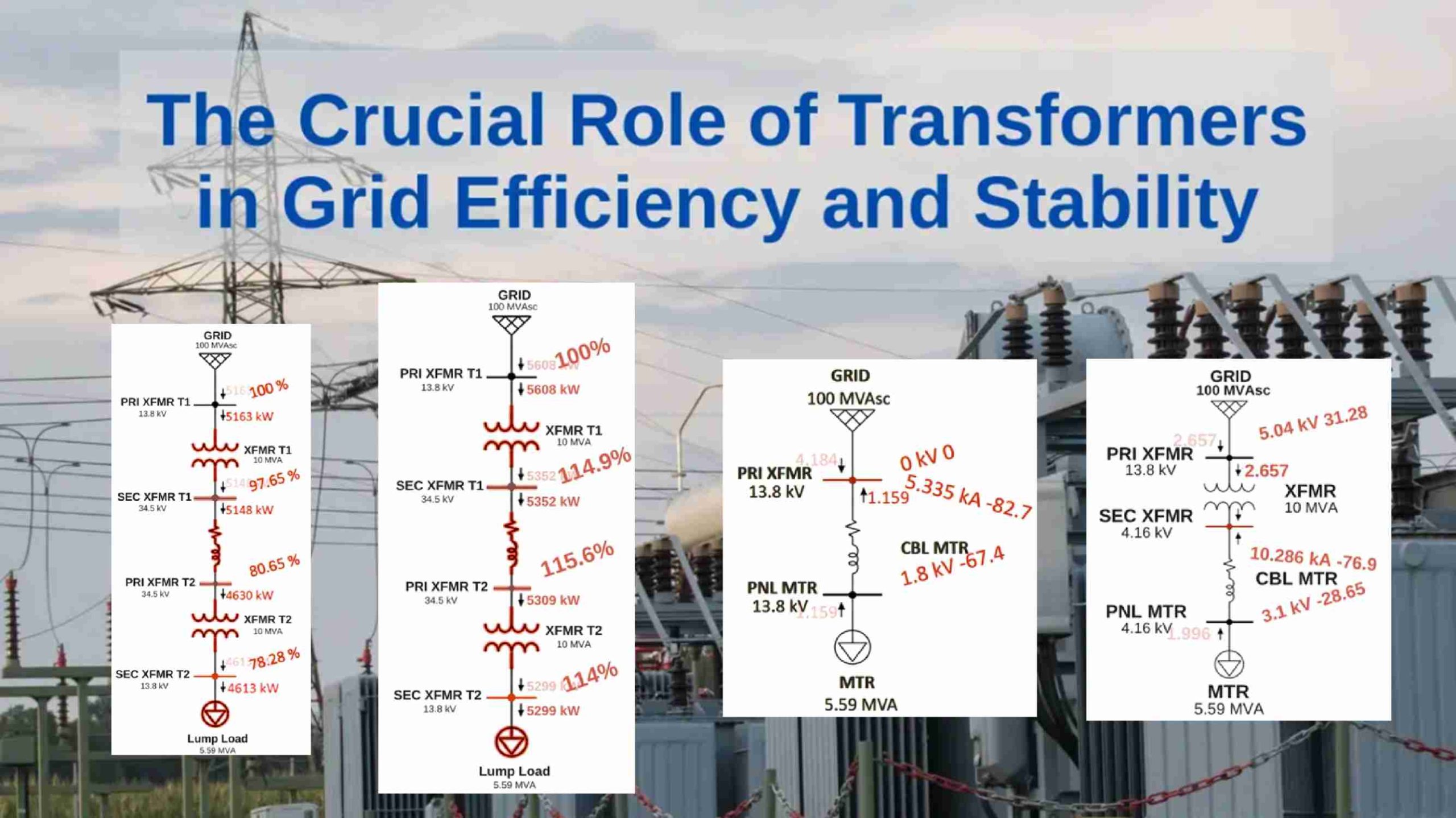

Using ETAP (Electrical Transient Analyzer Program), we modeled two transmission scenarios over a 100 km line to visualize the impact of voltage levels.

Scenario 1: Low-Voltage Transmission (Less Efficient)

- Voltage stepped up from 13.8 kV to 34.5 kV

- Power before transmission: 5.148 MW

- Power after transmission: 4.630 MW

- Loss: 0.518 MW

About 10% of the sending-end power was lost due to higher current levels.

Scenario 2: High-Voltage Transmission (Highly Efficient)

- Voltage stepped up from 13.8 kV to 230 kV

- Power before transmission: 5.352 MW

- Power after transmission: 5.309 MW

- Loss: 0.043 MW

That is roughly 12 times less power lost than in the first scenario: about 0.8% of the sending-end power, compared with about 10%.

This side-by-side comparison clearly demonstrates why high-voltage transmission — enabled by transformers — is fundamental to modern power systems.
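The comparison can be checked directly from the figures reported for the two scenarios. This short sketch only redoes the arithmetic on those numbers; the variable names are ours.

```python
# Sending-end and receiving-end power reported for each scenario
scenarios = {
    "34.5 kV": {"sent_mw": 5.148, "received_mw": 4.630},
    "230 kV": {"sent_mw": 5.352, "received_mw": 5.309},
}

def loss_summary(sent_mw, received_mw):
    """Return (loss in MW, loss as a percentage of sending-end power)."""
    loss_mw = sent_mw - received_mw
    return loss_mw, 100 * loss_mw / sent_mw

results = {name: loss_summary(**s) for name, s in scenarios.items()}
for name, (loss_mw, loss_pct) in results.items():
    print(f"{name}: loss {loss_mw:.3f} MW ({loss_pct:.1f}% of sending-end power)")

loss_ratio = results["34.5 kV"][0] / results["230 kV"][0]
print(f"Loss ratio: {loss_ratio:.1f}x")
```

Note that the two scenarios start from slightly different sending-end powers, so the percentage figures are the fairer basis for comparison.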

Beyond Efficiency: Transformers and Grid Stability

Transformers do far more than improve transmission efficiency. They are vital for grid protection, fault containment, and system stability.

Limiting Short-Circuit Currents

When faults occur — whether from lightning strikes, equipment failure, or human error — dangerously high short-circuit currents can flow through the system.

A transformer’s internal impedance, expressed as percent impedance (%Z), acts as a bottleneck that limits the fault power that can pass through it. This helps contain disturbances and reduces the risk of widespread equipment damage and cascading outages.

Using ETAP simulations, we compared two fault scenarios:

Without Transformer

- Short-circuit power: 127.5 MVA at 13.8 kV

- Voltage collapsed at the fault location

- High fault current stressed the system

With Transformer

- Short-circuit power reduced to 74.2 MVA at 4.16 kV

- About 42% reduction in fault power (from 127.5 MVA to 74.2 MVA)

- Improved voltage stability across the grid

This demonstrates how transformer impedance plays a critical role in preserving system integrity.
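A quick way to see the effect of %Z is the simplified "MVA method" often used for hand estimates. The sketch below combines the upstream short-circuit level with a transformer's own short-circuit MVA (rated MVA divided by per-unit impedance). The 10 MVA rating and 5.75% impedance are hypothetical values chosen for illustration, not the parameters of the ETAP model, and the method ignores X/R ratios and motor contribution.

```python
def transformer_sc_mva(rated_mva, percent_z):
    """Short-circuit MVA a transformer alone would pass from an infinite source."""
    return rated_mva / (percent_z / 100)

def series_sc_mva(*mva_values):
    """Combine series elements: short-circuit MVAs add like parallel resistances."""
    return 1 / sum(1 / m for m in mva_values)

SOURCE_SC_MVA = 127.5  # upstream short-circuit level from the article
RATED_MVA = 10.0       # hypothetical transformer rating
PERCENT_Z = 5.75       # hypothetical nameplate impedance

xfmr_mva = transformer_sc_mva(RATED_MVA, PERCENT_Z)
secondary_mva = series_sc_mva(SOURCE_SC_MVA, xfmr_mva)
reduction_pct = 100 * (1 - secondary_mva / SOURCE_SC_MVA)

print(f"Transformer alone: {xfmr_mva:.1f} MVA")
print(f"At the secondary:  {secondary_mva:.1f} MVA ({reduction_pct:.0f}% lower)")
```

Raising the assumed %Z lowers the short-circuit level at the secondary further, which is the bottleneck effect described above.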

Protection, Coordination, and Arc Flash Reduction

Selective Protection

Transformers provide electrical isolation between primary and secondary windings. This isolation allows engineers to design selective protection schemes using fuses, relays, and circuit breakers.

When properly coordinated, only the device closest to a fault trips — keeping the rest of the grid operational.

Reducing Arc Flash Hazards

Arc-flash incidents occur when a high current arcs through the air, producing intense heat and pressure. By limiting fault current, transformers can reduce the available incident energy, provided protective devices still clear the fault quickly. This improves worker safety and protects equipment.

The Future of Transformers: Smart and Connected

As the grid evolves, so must its backbone components.

Smart Transformers

Modern transformers are becoming intelligent assets equipped with sensors and communication systems. Unlike traditional passive devices, smart transformers provide real-time operational data such as:

- Winding and oil temperature

- Vibration levels

- Partial discharge activity

This data feeds advanced analytics and machine learning systems that enable predictive maintenance, reducing downtime and extending asset lifespan.
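As a toy illustration of the idea, the sketch below flags a sensor reading that departs sharply from its recent history using a rolling z-score. This is a deliberately simple stand-in for the analytics a real monitoring platform would run. The function name, window size, threshold, and oil-temperature readings are all made up for the example.

```python
from statistics import mean, stdev

def flag_anomalies(readings, window=5, threshold=3.0):
    """Return indices of readings more than `threshold` standard deviations
    away from the mean of the preceding `window` readings."""
    flagged = []
    for i in range(window, len(readings)):
        history = readings[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and abs(readings[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged

# Made-up oil temperature samples in degrees Celsius, with one spike
oil_temp_c = [61.0, 61.4, 60.8, 61.2, 61.1, 61.3, 60.9, 68.5, 61.0, 61.2]
alerts = flag_anomalies(oil_temp_c)
print("Readings flagged for review:", alerts)
```

A production system would more likely combine several signals (temperature, vibration, partial discharge) and account for load and ambient conditions before raising an alert.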

Renewable Integration and Microgrids

Renewable sources like wind and solar introduce variability into the grid. Smart transformers dynamically adjust voltage levels and communicate with other grid components to maintain stability.

They are also essential in microgrids, enabling facilities with local generation, such as hospitals or data centers, to operate independently during outages.

The Backbone of a Reliable Power System

From efficient long-distance transmission to fault containment and renewable integration, transformers are foundational to modern power infrastructure.

But simply installing a transformer isn’t enough. Its performance must be thoroughly analyzed and optimized through detailed engineering studies, such as:

- Load Flow Analysis

- Short Circuit Studies

- Arc Flash Analysis

- Protection & Coordination

- Transient Stability Studies

When properly designed and modeled, transformers ensure reliability, safety, and performance across the entire electrical network.

Final Thoughts

Transformers may not always be visible, but their impact is everywhere. They make large-scale power generation practical, keep our systems stable during faults, and enable the transition toward smarter, more sustainable grids.

The next time you switch on a light, remember: behind that simple action lies a carefully engineered system — and at its core, the humble yet powerful transformer.